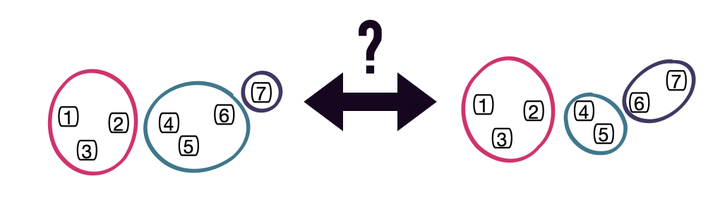

Comparing clusters.

Clustering reveals the coarse-grained structure of data. However, to understand, evaluate, and leverage data clusterings, we need to quantitatively compare them. Clustering comparison forms the backbone of many tasks, including method evaluations, consensus clustering, and tracking the temporal evolution of clusters.

Take, for example, the evaluation of a clustering or community detection method. To determine whether the method recovers a known feature of the data, we compare its result to a planted reference (a.k.a. ground truth) clustering, on the assumption that the more similar the method’s solution is to the reference clustering, the better the method.

Despite the importance of clustering comparison, no consensus has been reached on a standardized assessment. Many clustering similarity measures exist, and each rewards and penalizes different criteria, sometimes producing contradictory conclusions. The situation is even worse for generalized definitions of clusterings that include overlaps or hierarchy.
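To make the possibility of contradictory conclusions concrete, here is a minimal sketch in plain Python of two standard pair-counting measures, the Rand index and the Jaccard index. It does not use CluSim's API; the function names (`pair_counts`, `rand_index`, `jaccard_index`) and the toy clusterings are illustrative assumptions, with each clustering written as a list of cluster labels, one per element.

```python
from itertools import combinations


def pair_counts(labels_a, labels_b):
    """Count element pairs by whether each clustering groups them together.

    Returns (n11, n10, n01, n00):
      n11: together in both clusterings
      n10: together in A only
      n01: together in B only
      n00: apart in both clusterings
    """
    n11 = n10 = n01 = n00 = 0
    for i, j in combinations(range(len(labels_a)), 2):
        same_a = labels_a[i] == labels_a[j]
        same_b = labels_b[i] == labels_b[j]
        if same_a and same_b:
            n11 += 1
        elif same_a:
            n10 += 1
        elif same_b:
            n01 += 1
        else:
            n00 += 1
    return n11, n10, n01, n00


def rand_index(labels_a, labels_b):
    """Fraction of pairs on which the two clusterings agree."""
    n11, n10, n01, n00 = pair_counts(labels_a, labels_b)
    return (n11 + n00) / (n11 + n10 + n01 + n00)


def jaccard_index(labels_a, labels_b):
    """Like the Rand index, but ignores pairs that are apart in both."""
    n11, n10, n01, _ = pair_counts(labels_a, labels_b)
    denom = n11 + n10 + n01
    # Convention assumed here: two all-singleton clusterings are identical.
    return n11 / denom if denom else 1.0


# Toy data: a planted reference and two (deliberately poor) candidate results.
reference = [0, 0, 0, 0, 1, 1, 1, 1]
singletons = [0, 1, 2, 3, 4, 5, 6, 7]   # every element in its own cluster
one_cluster = [0, 0, 0, 0, 0, 0, 0, 0]  # all elements in a single cluster

for name, candidate in [("singletons", singletons), ("one_cluster", one_cluster)]:
    print(name,
          "Rand:", round(rand_index(reference, candidate), 3),
          "Jaccard:", round(jaccard_index(reference, candidate), 3))
```

In this toy case the Rand index scores the all-singletons result higher (16/28 versus 12/28), because it rewards pairs that are correctly kept apart. The Jaccard index ignores those pairs and scores the single-cluster result higher (12/28 versus 0), so the two measures rank the candidates in opposite order.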

CluSim is a Python package providing a unified library of over 20 clustering similarity measures for partitions, dendrograms, and overlapping clusterings.