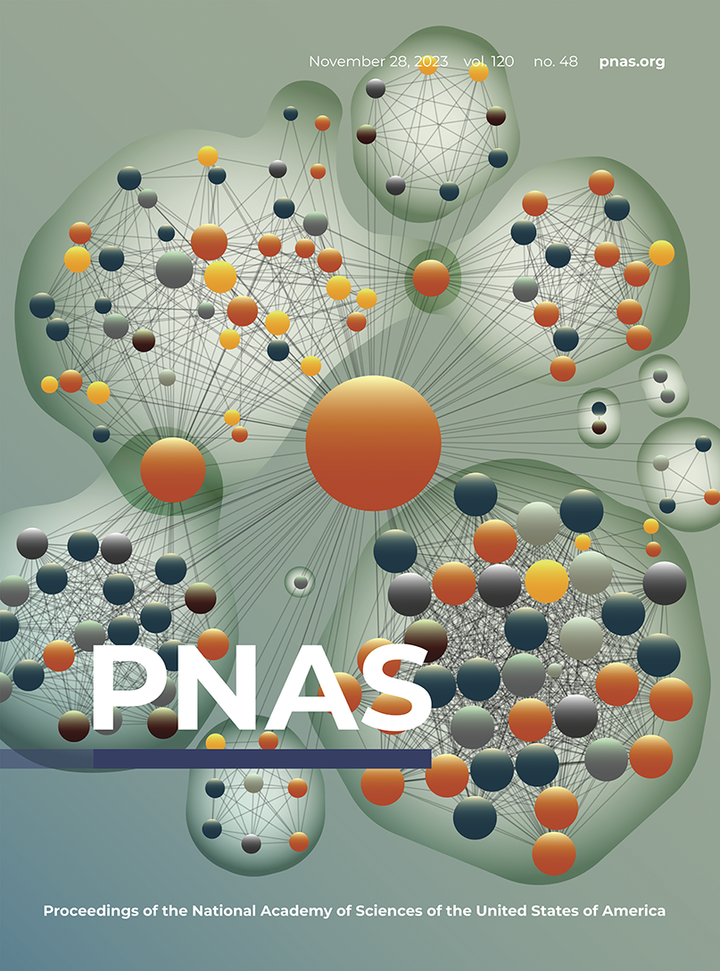

A network-based normalized impact measure reveals successful periods of scientific discovery across disciplines

Abstract

The impact of a scientific publication is often measured by the number of citations it receives from the scientific community. However, citation counts are susceptible to well-documented variations in citation practices across time and disciplines, limiting our ability to compare different scientific achievements. Previous efforts to account for these variations often rely on a priori discipline labels of papers, implicitly assuming that all papers within a discipline are homogeneous in their subject matter. Here, we propose a network-based methodology that quantifies the impact of an article by comparing it with locally comparable research, thereby eliminating the need for discipline labels. We show that the resulting measure is not susceptible to discipline bias and follows a universal distribution for articles published in different years, offering an unbiased indicator of impact across time and disciplines. We then use the indicator to identify science-wide high-impact research over the past half century and to quantify its temporal production dynamics across disciplines. This helps us identify breakthroughs from diverse, smaller disciplines, such as geosciences, radiology, and optics, as opposed to the citation-rich biomedical sciences. Our work provides insights into the evolution of science and paves the way for fair comparisons of the impact of diverse contributions across many fields.
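The abstract does not define how "locally comparable research" is selected, so the sketch below is purely illustrative and should not be read as the authors' method. It contrasts the label-based normalization the abstract criticizes (a paper's citations divided by the mean citations of papers sharing its discipline label) with a hypothetical network-based alternative. The assumption here is that a paper's comparison set consists of the papers co-cited with it, that is, papers appearing in the same reference lists as the focal paper. All function names (`citation_counts`, `label_normalized`, `cocited_peers`, `network_normalized`) and the toy data are invented for illustration.

```python
from collections import defaultdict
from statistics import mean


def citation_counts(citations):
    """Count citations received; `citations` maps each citing paper to its reference list."""
    counts = defaultdict(int)
    for refs in citations.values():
        for cited in set(refs):  # count each reference at most once per citing paper
            counts[cited] += 1
    return counts


def label_normalized(paper, counts, labels):
    """Label-based baseline: citations divided by the mean over papers with the same label."""
    peers = [p for p, lab in labels.items() if lab == labels[paper]]
    baseline = mean(counts.get(p, 0) for p in peers)
    return counts.get(paper, 0) / baseline if baseline else None


def cocited_peers(paper, citations):
    """Hypothetical local comparison set: papers cited alongside `paper`."""
    peers = set()
    for refs in citations.values():
        if paper in refs:
            peers.update(r for r in refs if r != paper)
    return peers


def network_normalized(paper, citations):
    """Hypothetical network-based score: citations relative to co-cited papers.

    Returns None when the paper is never co-cited, since no local baseline exists.
    """
    counts = citation_counts(citations)
    peers = cocited_peers(paper, citations)
    if not peers:
        return None
    baseline = mean(counts[p] for p in peers)  # every peer is cited, so baseline >= 1
    return counts[paper] / baseline


# Toy citation data (invented): citing paper -> papers it cites.
citations = {
    "A": ["x", "y"],
    "B": ["x", "y", "z"],
    "C": ["y", "z"],
    "D": ["w"],
}

print(network_normalized("x", citations))  # 0.8  (2 citations vs. peers y=3, z=2)
print(network_normalized("y", citations))  # 1.5  (3 citations vs. peers x=2, z=2)
```

The design point the sketch is meant to convey: the baseline for each paper is derived from the citation network itself, so no discipline label is needed, whereas `label_normalized` requires an external `labels` mapping and treats every paper under a label as equally comparable.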